Gradient Boosted Trees

Description |

Gradient boosting is an approach to machine learning which takes a simple, weak model and iteratively adds to it, reducing the error. This has proven to be very effective with decision trees, often with results rivaling deep learning, but with less need for tuning. Most projects provide Scikit-learn compatible APIs. If you wish to try out various classification (or regression) algorithms, you can compare against the various algorithms provided by scikit-learn without changing your code. |

|---|---|

Projects |

3 |

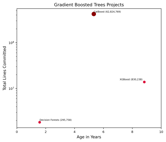

Lines Committed vs. Age Chart (click to view) |

|
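The description above can be made concrete with a small sketch. The pure-Python code below is a minimal illustration, not the algorithm used by any of the listed projects: it assumes regression with squared-error loss, a single numeric feature, and depth-1 decision trees ("stumps") as the weak learners. The names `Stump`, `StumpBoostingRegressor` and `training_mse` are hypothetical. Each round fits a stump to the current residuals (for squared error, these are the negative gradient) and adds a shrunken copy of it to the ensemble.

```python
# Minimal gradient boosting sketch: squared-error regression, one feature,
# depth-1 trees as weak learners. All names here are illustrative only.
from statistics import mean


class Stump:
    """Depth-1 regression tree on one feature: one threshold, two leaf values."""

    def fit(self, xs, residuals):
        best = None
        pairs = sorted(zip(xs, residuals))
        # Try a split halfway between each pair of distinct consecutive x values.
        for i in range(1, len(pairs)):
            if pairs[i - 1][0] == pairs[i][0]:
                continue
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            left = [r for _, r in pairs[:i]]
            right = [r for _, r in pairs[i:]]
            lv, rv = mean(left), mean(right)
            sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, threshold, lv, rv)
        if best is None:  # all x identical: predict the mean residual everywhere
            m = mean(residuals)
            best = (0.0, float("inf"), m, m)
        _, self.threshold, self.left_value, self.right_value = best
        return self

    def predict(self, xs):
        return [self.left_value if x <= self.threshold else self.right_value for x in xs]


class StumpBoostingRegressor:
    """Hypothetical boosted-stumps regressor with a scikit-learn-style fit/predict."""

    def __init__(self, n_estimators=100, learning_rate=0.1):
        self.n_estimators = n_estimators
        self.learning_rate = learning_rate

    def fit(self, xs, ys):
        # Start from a constant model: the mean minimizes squared error.
        self.init_ = mean(ys)
        self.trees_ = []
        preds = [self.init_] * len(ys)
        for _ in range(self.n_estimators):
            # Residuals y - F(x) are the negative gradient of the squared error.
            residuals = [y - p for y, p in zip(ys, preds)]
            tree = Stump().fit(xs, residuals)
            self.trees_.append(tree)
            # Add a shrunken copy of the new weak model to the ensemble.
            preds = [p + self.learning_rate * u for p, u in zip(preds, tree.predict(xs))]
        return self

    def predict(self, xs):
        preds = [self.init_] * len(xs)
        for tree in self.trees_:
            preds = [p + self.learning_rate * u for p, u in zip(preds, tree.predict(xs))]
        return preds


def training_mse(model, xs, ys):
    """Fit any object exposing fit/predict and return its training mean squared error."""
    preds = model.fit(xs, ys).predict(xs)
    return mean((y - p) ** 2 for y, p in zip(ys, preds))


if __name__ == "__main__":
    xs = [float(i) for i in range(20)]
    ys = [x * x for x in xs]
    # Training error shrinks as more weak models are added.
    for n in (1, 10, 100):
        print(n, training_mse(StumpBoostingRegressor(n_estimators=n), xs, ys))
```

The `fit`/`predict` pair follows the scikit-learn convention (`fit` returns `self`), which is what lets a helper like `training_mse` accept any estimator unchanged; real scikit-learn estimators, unlike this toy, expect a 2-D feature array `X`.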

Projects

| Project | Size Score | Trend Score | Byline |
|---|---|---|---|
| | 9.25 | 5.75 | A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU. |
| | 3.25 | 5.5 | A collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models in Keras. |
| | 6.25 | 5.25 | Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Flink and DataFlow. |